Hi there, this is my first blog post at azuretar, and I’m pretty excited to share what I know about big data analytics with Python and Spark. I hope this lesson helps you get started with big data. Happy reading!

Introduction to Big Data

Big Data is one of the biggest buzzwords around, and you have probably heard it by now. If you haven’t, you are in the right place. You can find plenty of fancy definitions of Big Data on the internet; I like this one from Wikipedia.

‘Big data usually includes data sets with sizes beyond the ability of commonly used software tools to capture, curate, manage, and process data within a tolerable elapsed time’

Big Data is changing the world for the better, and to see how, I recommend going through this article. While it is easy to talk about Big Data and its applications, it is much harder to learn how to manage Big Data effectively. In this article, I will try my best to cover the important Big Data concepts and demonstrate how we can use Apache Spark for big data processing.

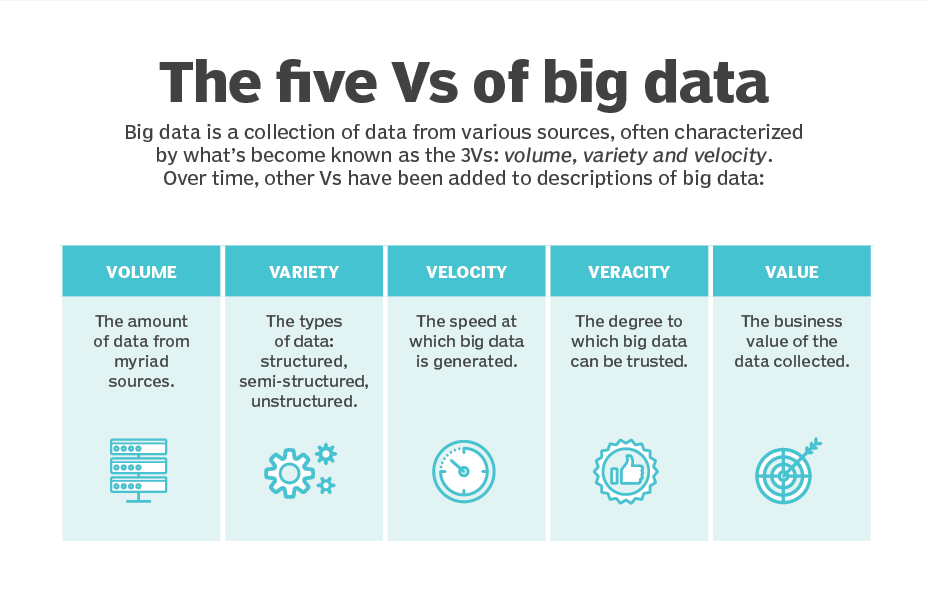

First of all, let us see the 5 main V’s that characterize Big Data: volume, velocity, variety, veracity, and value (p.s. there are many other V’s that you could hunt for!).

Apache Spark

Now that we have a general idea of the V’s that characterize big data, let’s see how Apache Spark provides a big data processing framework that helps us deal with them. But first, what is Apache Spark?

Apache Spark is a fast, general-purpose engine for large-scale data processing that lets you write applications quickly in Java, Scala, or Python. Its applications include streaming data manipulation (Spark Streaming), machine learning (Spark MLlib), and batch processing (Hadoop integration).

One of Apache Spark’s appeals to developers has been its easy-to-use APIs (RDDs and DataFrames) for operating on large datasets.

Resilient Distributed Dataset (RDD)

The RDD has been the primary user-facing API in Spark since its inception. It is an immutable, distributed collection of the elements of your data, partitioned across the nodes of your cluster, that can be operated on in parallel through a low-level API of transformations and actions.

Transformations are operations on RDDs that return a new RDD, such as map() and filter(). They are lazy: Spark only records them and does no work until an action asks for a result.

Actions, on the other hand, are operations that kick off a computation and either return a result to the driver program or write it to storage, such as count() and first().

DataFrames

Like an RDD, a DataFrame is also an immutable distributed collection of data. However, unlike an RDD, the data is organized into named columns, like a table in a relational database. DataFrames are designed to make processing large data sets even easier: they let developers impose a structure onto a distributed collection of data, which allows a higher level of abstraction. They also make Spark accessible to a wider audience, beyond specialized data engineers.

Which one to use: RDDs or DataFrames?

While RDDs offer low-level functionality and control, DataFrames let you impose a custom view and structure, offer high-level, domain-specific operations, store data more compactly, and usually execute faster because Spark can optimize their queries. So choose your API wisely! To learn more about the differences between RDDs and DataFrames, you can check out this blog.

Time to get your hands dirty!

Let’s dive into some code to see how you can set up Spark on your own machine. First things first, you need to make sure you have Java and pyspark installed on your machine. This tutorial shows you how to do that. Once you are done with this, the next step (which is not mandatory, but I certainly recommend it) is to install Jupyter Notebook for Python (follow this link). Okay, now you just need to open a Jupyter notebook with a Python 3 kernel and follow the steps below.

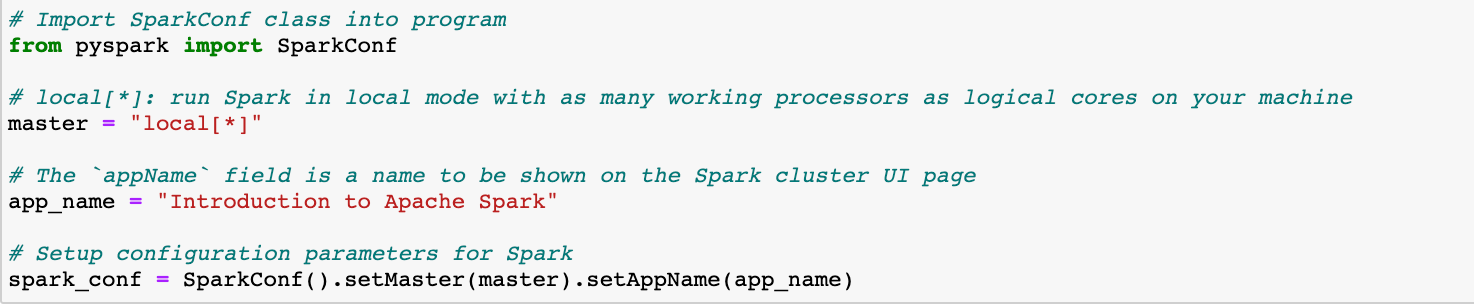

1. Spark Configuration

To run a Spark application locally or on a cluster, a few configurations and parameters need to be set. SparkConf is the object that holds this configuration.

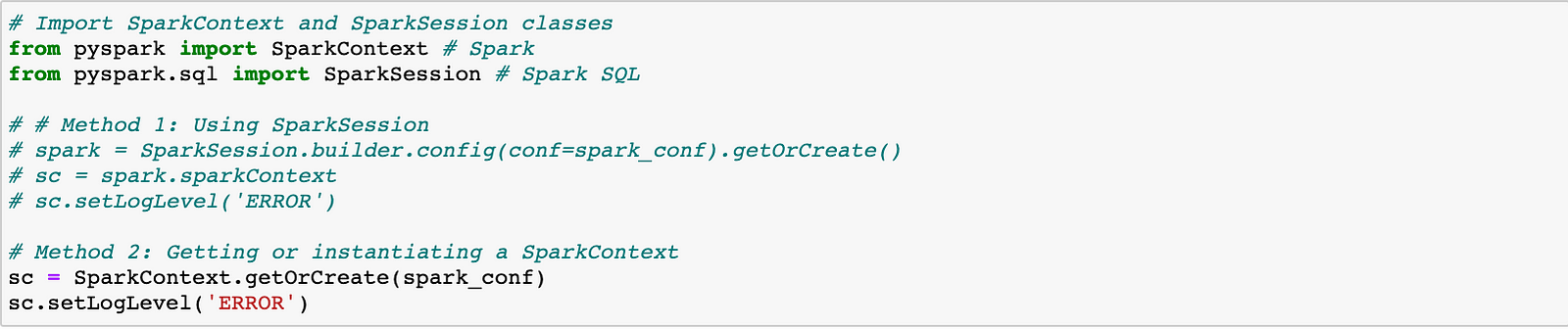

2. SparkContext and SparkSession

Using pyspark, we can initialise Spark, create RDDs from the data, and sort, filter, and sample the data. In particular, we import SparkContext from pyspark, which is the main entry point for Spark Core functionality. The SparkSession object provides methods for creating DataFrames from various input sources. There are two ways to create a SparkContext instance: one using SparkContext directly, and the other using a SparkSession object. Below, I’ve shown both ways (with one of them commented out).

3. Playing with RDDs

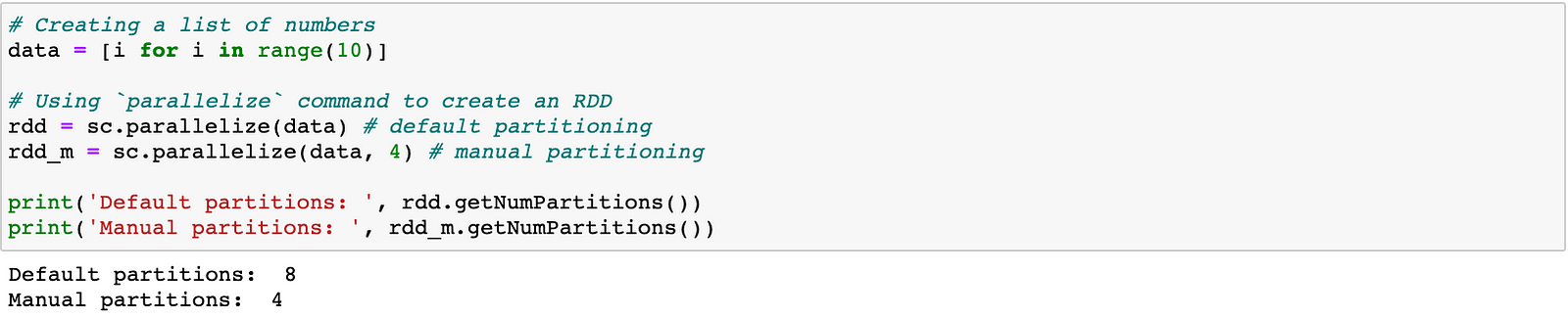

Now that we have set up a SparkContext instance, we can play around with RDDs to see why they are effective at parallelizing data operations. Notice that we instantiated SparkContext rather than using SparkSession because we will be playing with RDDs. In the upcoming section, we will use SparkSession when dealing with DataFrames.

There are many ways to create RDDs from your data, two of which I have shown below. One way is to use the parallelize function to convert an iterable such as a set, list, or tuple to an RDD.

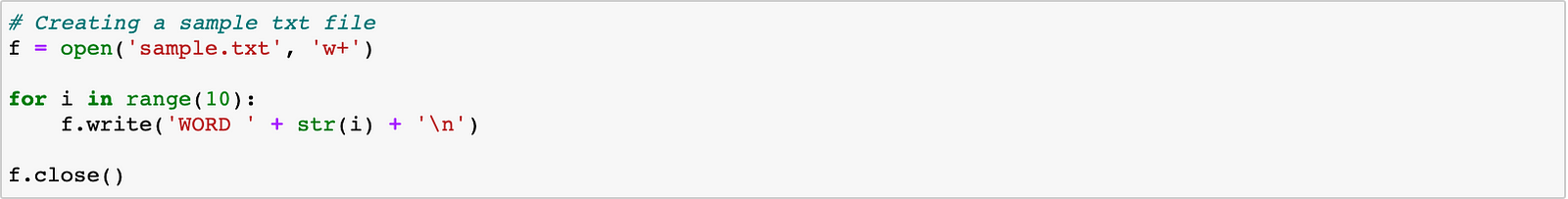

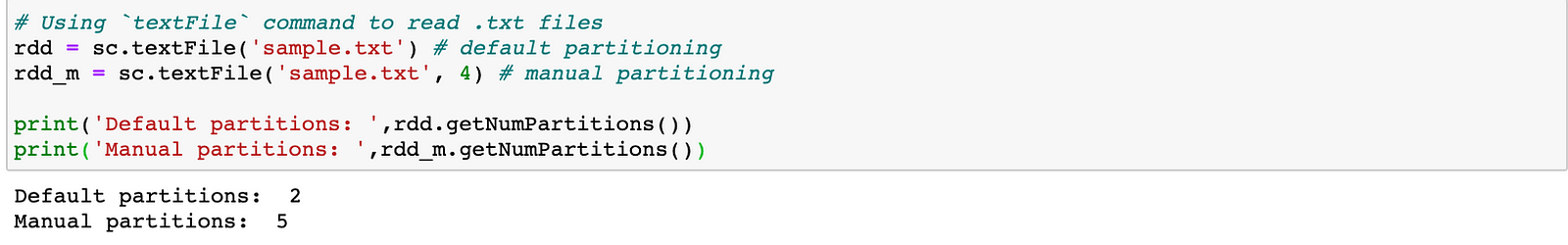

Another way is to use the textFile function to read a text file and convert the contents to an RDD.

For more on RDDs, I recommend this exercise for some hands-on practice. This cheatsheet on RDDs in Python will also help you in the long run (trust me).

4. Playing with DataFrames

To work with DataFrames in Spark, we need to create the SparkContext through a SparkSession. So, in the above snippet where we created a SparkContext instance, we need to use Method 1 instead of Method 2. Once that is done, we have a SparkSession up and running, which provides two methods for creating DataFrames, as shown below.

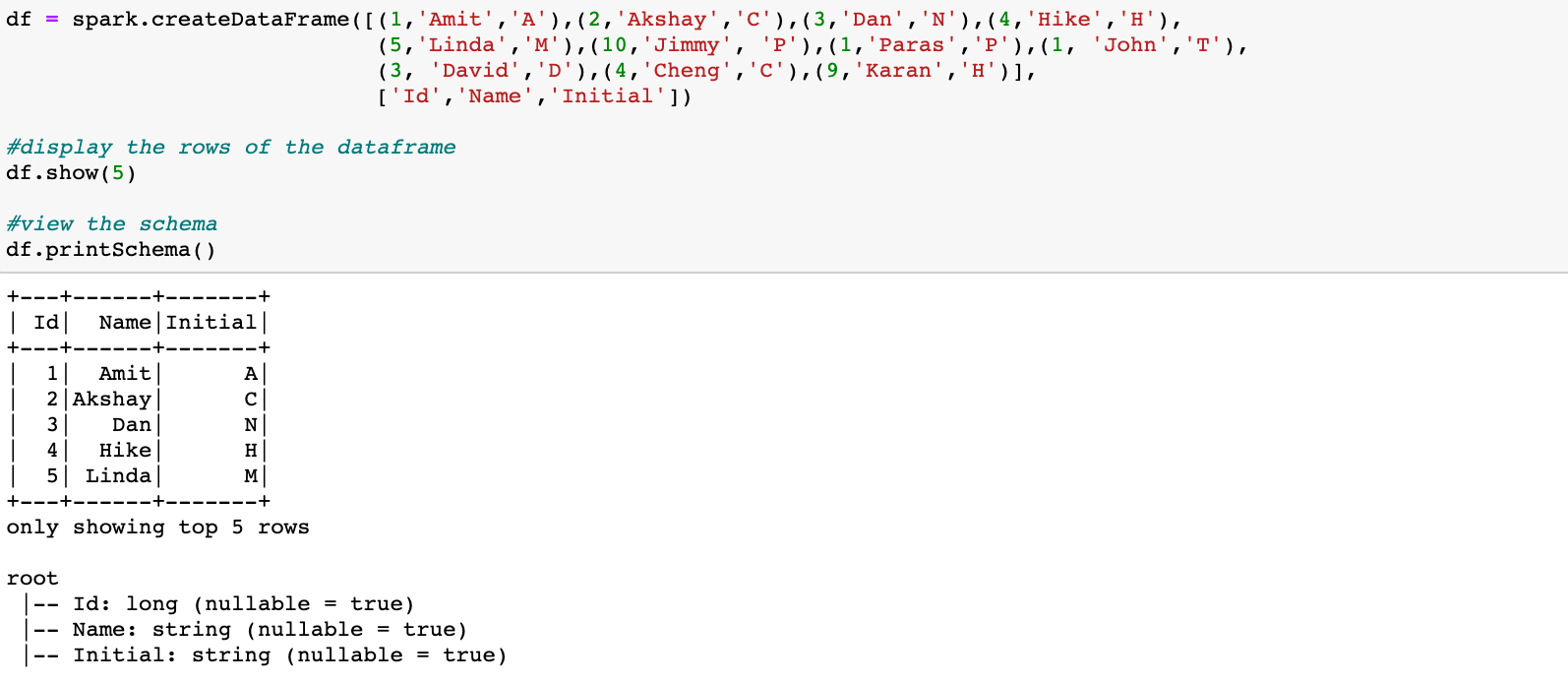

One way to create DataFrames is to use the method createDataFrame, as shown below.

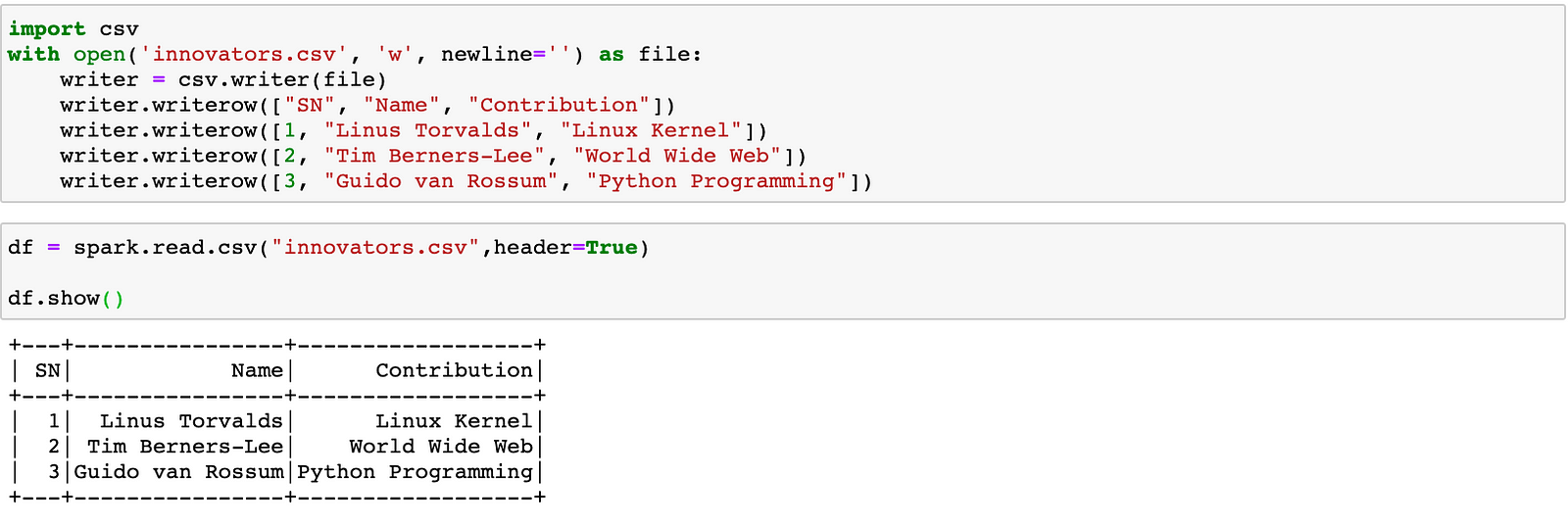

Another way to create a DataFrame is to use the read.csv method to load the data from csv to a DataFrame, as shown below.

There are a lot of partitioning strategies available in pyspark for a DataFrame/RDD, but I will skip that part: the default strategy is a sensible choice for most workloads, so you rarely need to change it when starting out. Spark also has a feature called the Catalyst Optimizer, which optimizes structured queries expressed in SQL or via the DataFrame/Dataset APIs; this can reduce the runtime of programs and save costs.

Now that we have a DataFrame to work on, we can make use of a large number of methods, such as columns, select, count, describe, and filter, which I will try to demonstrate through the following Machine Learning use case.

Machine Learning in Spark using MLlib

As mentioned earlier, MLlib is Apache Spark’s scalable machine learning library, and its goal is to make practical machine learning scalable and easy. MLlib supports preprocessing, munging, model training, and making predictions at scale. In the following section, we are going to use Spark for machine learning on the Adult Income dataset from the UCI repository.

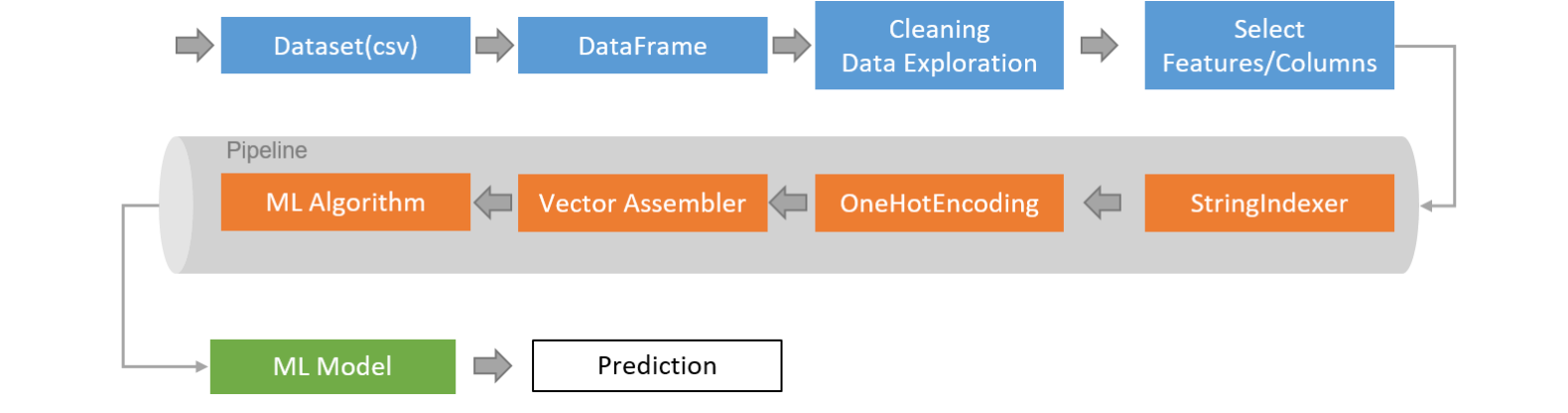

The following image shows a sample flow of the ML approach to be followed.

Leaving aside the first four stages in the above image, we are mainly interested in the pipeline API. MLlib standardizes APIs for machine learning algorithms to make it easier to combine multiple algorithms into a single pipeline, or workflow. Before we dive deep into pipelines, it is fair to discuss the components of a pipeline, namely Transformers and Estimators. A Transformer is an abstraction that includes feature transformers and learned models; it turns one DataFrame into another through transform(). An Estimator abstracts the concept of a learning algorithm, or any algorithm that fits or trains on data; its fit() method returns a Transformer. In the pipeline shown above, VectorAssembler is a Transformer while the ML algorithm is an Estimator. StringIndexer (and OneHotEncoder in Spark 3.x) act as feature transformers in the pipeline, but are technically Estimators, since they are first fit to the data to learn the categories.

Now, in the classification task, after data preprocessing, we first declare the 4 stages of the pipeline, then plug these stages into the Pipeline API and create an ML model for Logistic Regression. You can find the code for this in the following GitHub repository.

Summary

This article gave a high-level overview of Big Data and of the power of Apache Spark in handling it. Some big data terminology was also discussed, along with hands-on coding. Congrats on reading through this article! This is just the tip of the iceberg, as you shouldn’t expect a topic like Big Data to be covered in such a small article. There are many other things that I will cover in the coming days, such as Spark Streaming, Apache Kafka, and many more, so stay tuned!

References

- https://spark.apache.org/docs/latest/ml-pipeline.html#transformers

- https://databricks.com/glossary/what-is-machine-learning-library

- https://en.wikipedia.org/wiki/Big_data

- https://searchcloudcomputing.techtarget.com/tip/Cost-implications-of-the-5-Vs-of-big-data

- https://spark.apache.org/docs/0.9.1/python-programming-guide.html